EVROPA: an experiment in VR

Diving headfirst into Unity & the Google Cardboard SDK and coming out the other side with a strange underwater VR experience.

What can I make with Cardboard?

What now seems like ages ago, I received a Cardboard viewer from a friend working at Google. After borrowing an old Android and checking out the Arctic Journey demo, I immediately got excited to start on a project of my own.

Some rejected ideas (and please, feel free to steal any of them) included:

- Time Jumping

Use Street View and the phone’s GPS coordinates to show present-day locations, then introduce the ability to jump forward or back in time to the same location generated by historical footage and/or CGI.

- ReplicaFlat

Flats in Hong Kong are tiny. What if you had a VR replica of your flat that you could decorate any way you want? Double the decoration, double the fun!

- Rock Sim

Like Mountain, but even more boring and in VR.

- DiVR

You’re descending into the ocean in a diving bell and can only look around, but there’s always something in your peripheral vision you can’t quite make out…

- Choose Your Own AdVRnture

An on-rails story: advance the plot or change the environment based on where your gaze is pointed.

Eventually, I settled on a jellyfish simulation, as I’ve always had a bit of fascination with the deep sea, and strapping a virtual camera on top of such a simple, floating life-form seemed to fit the bill for a first VR game.

Building off this concept, I came up with the following parameters for the game:

- Food is floating all around and you must target the pieces with your reticle, then tap to eat them

- If you eat too little, you starve to death; if you eat too much, you explode

- “Win” by finding another jellyfish with whom to mate

Soon, though, I realised that getting an animated, 3D-modelled jellyfish up to my standards of quality in Unity would require more time than I’d have liked to spend, so I sat down for a rethink and instead came up with the idea of a submarine adventure. Now, our story is as follows:

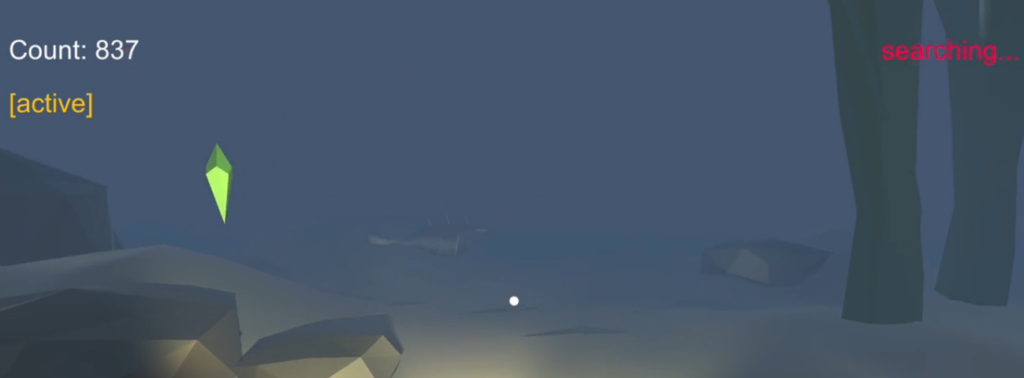

EVROPA. You are a remote technician working on the Jovian moon Europa. An exploratory sub has unexpectedly been incapacitated. Take control of it, make sure to keep it powered up by collecting the energy crystals, and try to find out what damaged it.

Gameplay consists of looking 360° around you and focusing on the floating crystals. Pressing the Cardboard’s button on one will increase your score by 100 points. However, your score is always dropping by about 50 points per second; get to 0 and you lose. To win the game, find the fish swimming around the foggy perimeter of the game area and tap on it.
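As a rough sketch of those scoring rules in plain C# (this is not the game's actual code; the class name, method names, and any starting score are made up for illustration):

```csharp
using System;

// Hypothetical helper: tracks the sub's energy score.
public class EnergyMeter
{
    public const float CrystalValue = 100f;   // points gained per crystal
    public const float DrainPerSecond = 50f;  // approximate drain rate

    public float Score { get; private set; }

    // True once the score has run out, i.e. the player has lost.
    public bool IsDepleted
    {
        get { return Score <= 0f; }
    }

    // The starting score is an assumption; the article does not state one.
    public EnergyMeter(float startingScore)
    {
        Score = startingScore;
    }

    // Call once per frame with the elapsed seconds (Time.deltaTime in Unity).
    public void Tick(float deltaSeconds)
    {
        Score = Math.Max(0f, Score - DrainPerSecond * deltaSeconds);
    }

    // Call when the player taps a crystal; does nothing after a loss.
    public void CollectCrystal()
    {
        if (!IsDepleted)
        {
            Score += CrystalValue;
        }
    }
}
```

Keeping the rules in a class with no Unity dependencies makes it easier to tweak the numbers later without touching any scene objects.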

Design

It felt good to dive into Cinema 4D again. I hadn’t really worked in 3D since I graduated and although I was a bit rusty, I re-acclimated quickly. As always, I wasted a lot more time trying to perfect the concept before I moved on to execution. I browsed ArtStation for hours, always constrained by the idea that there needed to be a virtual camera on top of the sub. Eventually, I realised the need to just pick something and work with it, or else the game’d never get done.

Making an ocean floor was actually way easier than I thought. Just generate a landscape object, then pull it around a bit with the magnet tool to make some mountains and valleys. For the seaweed, just clump some tall cubes together and add a bunch of different deformers. Garnish with some rocks, et voilà, finished. Background and atmospheric effects, I found, can easily be added later in Unity.

Learning Unity and Google VR on the fly

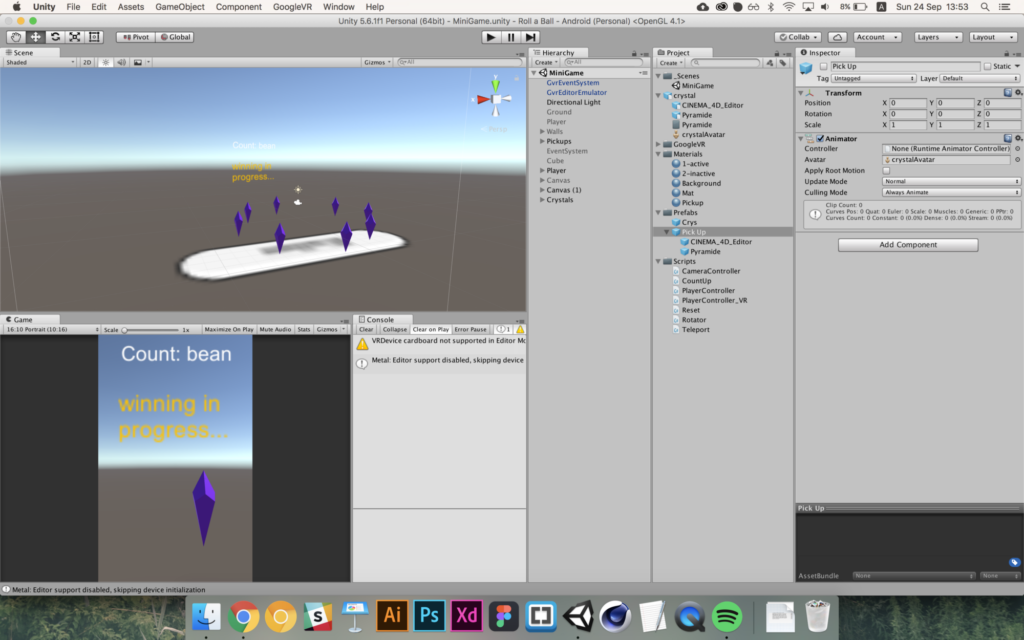

Since this was my first go at Unity (or any game engine), I spent a lot of time with the official Roll-A-Ball tutorial, as well as Todd Kerpelman’s walkthrough on how to adapt an existing game for use on a Cardboard. My biggest stumbling blocks early on were definitely:

- coming to terms with the way code and the GUI interact and connect elements within Unity

- working through/around/against the Google VR SDK

Connections in Unity

Up until now, my coding knowledge has been generally restricted to web programming, as well as a bit of Java. Although Unity has the option to write code in JavaScript format, I found that most people and tutorials prefer C#. Logic is logic, though, so it wasn’t that hard for me to figure out most of the differences in syntax (and I really wasn’t doing anything that complex).

What did throw me for a loop, however, was how variables and elements are connected in the programme. Instead of having a single master control file, scripts are attached to the various game objects themselves. Then, instead of declaring, for example, that myCube.material = blueMaterial, you declare a public variable, myMaterial, in a script attached to myCube, then exit the script editor and drag the blueMaterial element from your Assets folder onto the script area of myCube’s properties. Really hard for me to get used to.
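A minimal sketch of that pattern, assuming a script attached to myCube (the class name CubeColour is hypothetical; myMaterial is the public variable described above):

```csharp
using UnityEngine;

// Attach this script to myCube in the editor.
[RequireComponent(typeof(Renderer))]
public class CubeColour : MonoBehaviour
{
    // Public fields appear as slots in the Inspector.
    // Drag blueMaterial from the Assets folder onto this slot.
    public Material myMaterial;

    void Start()
    {
        // Skip the assignment if the slot was left empty in the Inspector.
        if (myMaterial != null)
        {
            GetComponent<Renderer>().material = myMaterial;
        }
    }
}
```

The script never mentions blueMaterial by name. The connection lives in the scene, which is why it can feel invisible when you are used to reading everything in code.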

The Google VR SDK

While struggling to get the flow of Unity was understandable for a beginner, getting the SDK to work right definitely made me feel like I was in over my head.

Repeatedly.

For starters, the SDK is constantly being updated, rendering older tutorials either disorienting or useless. For example, in the above tutorial, the main object you add to your project to activate VR is GvrMain. However, in the most recent release of the SDK, that file is known as GvrEditorEmulator. It took me the better part of an hour of Googling to figure that out.

I solved most of my problems by obsessively switching back and forth between my project and Google’s demo project to see exactly how their file was laid out. Or in other cases, I simply spaghetti-coded around things that were beyond my abilities.

Solvin’ problems, one at a time

All in all, I do feel like I learned a lot, starting from nothing. So I want to share some of the problems I solved below, in case anyone else might be having similar issues. Feel free to message me if you still have any questions.

Back up often!

Good life advice, and especially good Unity advice, as not all actions can be undone. Be absolutely sure you know what you’re doing when working with prefabs. I accidentally wiped out a prefab with all its settings and attached scripts by dragging a .c4d file into it (and of course clicking “yes” in the confirm dialogue, as I thought it would just replace the geometry). Be careful!

Gaze & Raycasting

When using GVR’s gaze reticle, a canvas relatively close to the camera will block any collisions the reticle should be detecting, unless you turn collisions off for the canvas.

To check collisions with the circling fish only, I cast a ray from the middle of the camera. All other objects, including the crystals, are set to ignore the raycast layer.
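Another way to limit a ray to a single kind of object is a layer mask. The sketch below is not how the game does it; it assumes a layer named "Fish" has been created by hand, and the class name and maxDistance value are made up:

```csharp
using UnityEngine;

public class FishGazeCheck : MonoBehaviour
{
    public Camera cam;               // the VR camera, assigned in the Inspector
    public float maxDistance = 100f; // assumed reach of the ray, in world units

    private int fishMask;

    void Start()
    {
        // "Fish" is a hypothetical layer; create it under Tags & Layers first.
        fishMask = LayerMask.GetMask("Fish");
    }

    void Update()
    {
        if (!Input.GetButtonDown("Fire1"))
        {
            return;
        }

        // Ray through the centre of the viewport, i.e. straight ahead of the camera.
        Ray ray = cam.ViewportPointToRay(new Vector3(0.5f, 0.5f, 0f));
        RaycastHit hit;

        // Only colliders on the Fish layer can be hit; everything else is skipped.
        if (Physics.Raycast(ray, out hit, maxDistance, fishMask))
        {
            Debug.Log("Hit " + hit.collider.name);
        }
    }
}
```

With a mask, the other objects can stay on their normal layers, at the cost of remembering to put the fish on the right one.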

How do you make an object respond to a click in C#

Camera.ScreenPointToRay

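    // On a tap (the Cardboard button), cast a ray from the centre of the screen
    if (Input.GetButtonDown("Fire1")) {
        Ray ray = cam.ScreenPointToRay(new Vector3(cam.pixelWidth / 2, cam.pixelHeight / 2, 0));
        // Draw the ray in the Scene view for debugging
        Debug.DrawRay(ray.origin, ray.direction * 10, Color.yellow);
        RaycastHit hit;
        // Only the fish can be hit, since everything else ignores raycasts
        if (Physics.Raycast(ray, out hit)) {
            SetCountText();
        }
    }

How to make a cheap, underwater environment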

- Add fog, change the colour to blue, and give it a short draw distance

- Change the scene’s light to blue and make it a point light (as if the ship is casting some subtle blue light from its machinery on the sand below)

- In the camera settings, change the skybox colour to solid, then play around with the colour picker until the background matches the fog
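The same three steps can also be set from a script instead of the editor. This is only a sketch: the class name, the colour, and the fog distances are guesses to be tuned by eye.

```csharp
using UnityEngine;

public class UnderwaterLook : MonoBehaviour
{
    public Camera cam;      // the VR camera
    public Light shipLight; // the scene's light

    // Assumed shade of blue; adjust until fog and background blend together.
    public Color waterColour = new Color(0.05f, 0.2f, 0.35f);

    void Start()
    {
        // Blue fog with a short draw distance (distances are assumptions).
        RenderSettings.fog = true;
        RenderSettings.fogMode = FogMode.Linear;
        RenderSettings.fogColor = waterColour;
        RenderSettings.fogStartDistance = 2f;
        RenderSettings.fogEndDistance = 30f;

        // Blue point light, as if cast by the ship's machinery.
        shipLight.type = LightType.Point;
        shipLight.color = waterColour;

        // Solid-colour background that matches the fog.
        cam.clearFlags = CameraClearFlags.SolidColor;
        cam.backgroundColor = waterColour;
    }
}
```

Using one colour for both the fog and the background hides the edge of the scene, since distant objects fade into exactly the shade behind them.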

Restricting rotation to only one axis

The submarine is a child of the VR camera, but I still wanted the player to be able to look down and see it below them. Full disclosure: I have no idea how eulerAngles work.

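    // eulerAngles expresses a rotation as degrees around the x, y and z axes
    float yRotation = vrcam.transform.eulerAngles.y;
    // Copy only the camera's y rotation (plus 180 degrees); keep the sub's own x and z
    transform.eulerAngles = new Vector3(transform.eulerAngles.x, yRotation + 180, transform.eulerAngles.z);

Counteracting neck-to-eye distance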

The default behaviour of a VR camera is to emulate how the human head works. So the eyeball/lens is offset from the centre of rotation by some amount. This is not ideal in my game, as I want the VR camera to behave as if it is a real camera with very little offset from the point of rotation. However, it doesn’t seem like this is a functionality that is easy to change. This thread (check “Neck Model Scale”) seems to suggest it is possible, but only by editing the code after compiling the game…

Sharp UI text

Maybe it was just the scale of my project, but UI text always seemed blurry when it was at an appropriate size. To counter this, I made the font size and the canvas’ width & height extra large, then scaled down the canvas to around 0.001.
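Scripted, the trick might look something like the sketch below. It assumes a World Space canvas (the article does not say which render mode was used), and the class name and exact sizes are made up; only the 0.001 scale comes from the text above.

```csharp
using UnityEngine;
using UnityEngine.UI;

public class SharpWorldText : MonoBehaviour
{
    public Canvas canvas; // assumed to be a World Space canvas
    public Text label;    // the UI text on that canvas

    void Start()
    {
        RectTransform rect = canvas.GetComponent<RectTransform>();

        // Oversized canvas and font (both sizes are assumptions)...
        rect.sizeDelta = new Vector2(2000f, 1000f);
        label.fontSize = 150;

        // ...then shrink the whole canvas so it fits the scene.
        // At this scale the canvas ends up 2 x 1 world units.
        rect.localScale = Vector3.one * 0.001f;
    }
}
```

The text is rasterised at the large font size before the canvas is scaled down, which is why it comes out sharper than small text on a small canvas.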

Magical sine waves

Somehow, the line below gives an interesting organic swimming motion that oscillates, speeds up, and slows down the circling fish, but I really have no idea why.

    // Rocks the fish around its x axis between -10 and +10 degrees, about once every 2.1 seconds
    transform.rotation = Quaternion.Euler(10f * Mathf.Sin(Time.time * 3f), 0f, 0f);

Post-mortem

Is this project over? I never like shutting the door completely on my projects, but I think I’ll be putting EVROPA on the shelf for the time being. Were I to dive in again, the two areas I would like to improve the most would be the in-game UI, as well as the logic & difficulty of the collection aspect.

User Interface

Right now, time-to-loss is displayed as a simple counter racing towards 0. I definitely had bigger plans for this aspect, such as simply dimming the sub’s lights or having some kind of mask over the camera implying that the lens is freezing over or somehow shutting down. I do believe I could figure out how to do this if I did some more research.

Logic & difficulty

The game is too easy! I know that if this game is going to be at all “fun”, a lot of work still needs to go into tweaking the logic behind the crystals’ location, movement, and respawning, as well as the actions governing the appearance of the circling fish.

Music & SFX

Of course. Always need music and effects. One of these days, I’ll start a project with only the audio and see where I go from there.

Originally published on Medium.